Talk:Server Lifecycle

Hey, how about using the discussion page :-P

Since we are committing to doing everything from puppet disable to power off in one shot (not in days but in an hour let's say), no point in waiting for the daily storedconfig cron cleanup job based on decomm.pp. So I removed that, and added some comments later about how we don't rely on that mechanism. We want to get away from that anyways since this file is eventually to be tossed.

Fixed order: we remove from puppet manifests etc before we disable puppet on the host. And we can do any of those removal steps and stop in the middle before we commit to the process leading to power off.

In theory one could write a script that did puppet disable/storedconfigs clean/remove cert/remove salt key, all with one command. This would make it impossible to back out if there were issues with the icinga refresh, but if we ensured that there was always someone who could look at and fix those issues when this script is run, that would be ok. Note that puppet node clean (available from puppet 2.7.10 on) removes the cert and cleans up exported resources, so we should start using it instead of puppetstoredconfigclean.

Comments/complaints/corrections please... -- ariel (talk) 09:48, 4 November 2013 (UTC)
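The one-command script floated in the comment above could look roughly like the dry-run sketch below. It only builds the list of commands and never executes anything. The function name, the "where to run" labels, and the assumption that the salt key id equals the hostname are illustrative, not existing tooling. It uses `puppet node clean` in place of separate storedconfigs and cert cleanup, as the comment suggests.

```python
from typing import List, Tuple

Command = Tuple[str, List[str]]  # (where to run it, argv)


def decommission_commands(fqdn: str) -> List[Command]:
    """Return the cleanup commands for one host, in order, without running them."""
    if not fqdn or " " in fqdn:
        raise ValueError("expected a single fully qualified hostname")
    return [
        # Disable the agent on the host itself.
        ("host", ["puppet", "agent", "--disable"]),
        # Removes the cert and cleans up exported resources (puppet >= 2.7.10).
        ("puppetmaster", ["puppet", "node", "clean", fqdn]),
        # Delete the minion key; -y skips the interactive confirmation.
        # Assumption: the salt key id is the host's FQDN.
        ("salt master", ["salt-key", "-y", "-d", fqdn]),
    ]


# Hypothetical hostname, for illustration only.
for where, argv in decommission_commands("host1001.example.org"):
    print(f"[{where}] {' '.join(argv)}")
```

Keeping this as a dry run leaves room for a human to review the list before committing, which matters given that the steps cannot be backed out once run.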

Introducing Netbox

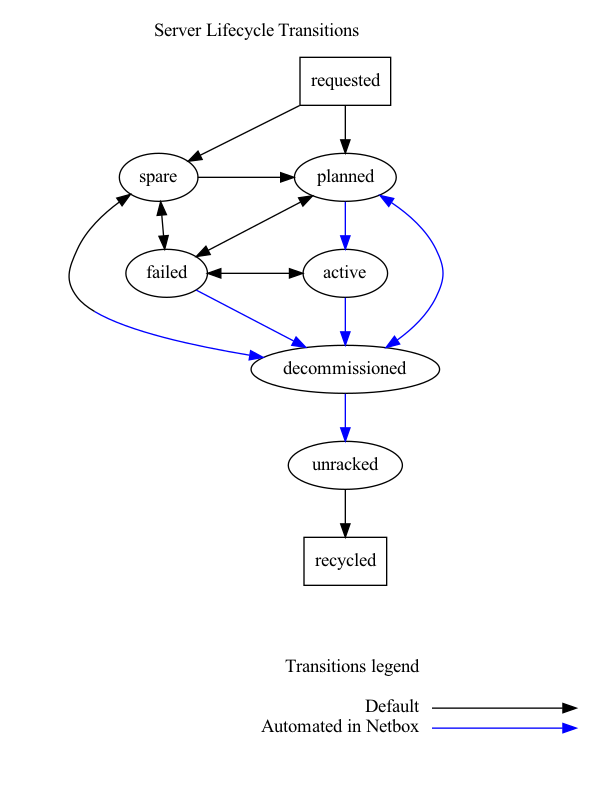

Netbox will replace Racktables. What follows is a proposal to adapt our current Server Lifecycle to the introduction of Netbox.

Netbox available statuses

These are the currently available statuses in Netbox:

DEVICE_STATUS_OFFLINE = 0

DEVICE_STATUS_ACTIVE = 1

DEVICE_STATUS_PLANNED = 2

DEVICE_STATUS_STAGED = 3

DEVICE_STATUS_FAILED = 4

DEVICE_STATUS_INVENTORY = 5

Lifecycle defined statuses

| Lifecycle status | Netbox status |
|---|---|
| requested | none, not yet in Netbox |
| spare | PLANNED |
| staged | STAGED |
| active | ACTIVE |
| failed | FAILED |
| decommissioned | INVENTORY |
| unracked | OFFLINE |
| recycled | none, not anymore in Netbox |
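For tooling that needs to translate between the two vocabularies, the table above could be encoded as in the minimal sketch below. The numeric values are the Netbox constants listed earlier. The `DeviceStatus` class, the `LIFECYCLE_TO_NETBOX` dict and the `netbox_status` helper are names invented here for illustration, not part of Netbox.

```python
from enum import IntEnum
from typing import Dict, Optional


class DeviceStatus(IntEnum):
    """Netbox device status constants, as listed on this page."""
    OFFLINE = 0
    ACTIVE = 1
    PLANNED = 2
    STAGED = 3
    FAILED = 4
    INVENTORY = 5


# Lifecycle status -> Netbox status; None means the device is not in Netbox.
LIFECYCLE_TO_NETBOX: Dict[str, Optional[DeviceStatus]] = {
    "requested": None,
    "spare": DeviceStatus.PLANNED,
    "staged": DeviceStatus.STAGED,
    "active": DeviceStatus.ACTIVE,
    "failed": DeviceStatus.FAILED,
    "decommissioned": DeviceStatus.INVENTORY,
    "unracked": DeviceStatus.OFFLINE,
    "recycled": None,
}


def netbox_status(lifecycle: str) -> Optional[DeviceStatus]:
    """Look up the Netbox status for a lifecycle status (KeyError if unknown)."""
    return LIFECYCLE_TO_NETBOX[lifecycle]
```

Note that the mapping is not reversible for the two end states: both `requested` and `recycled` correspond to "not in Netbox".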

Lifecycle transitions

Only the high-level overview is described here; it will be integrated into the page itself.

requested -> spare

- DC Ops receives the shipment to the datacenter (not yet racked)

- DC Ops adds device to Netbox, with status `PLANNED`

spare -> staged

- DC Ops racks the device, if not already racked

- DC Ops performs the initial setup (operating system installed, puppet etc.)

- DC Ops assigns a rack position in Netbox, and changes status from `PLANNED` to `STAGED`

staged -> active

- service owner performs acceptance tests and (re)provisions their service

- service owner changes Netbox's status from `STAGED` to `ACTIVE`

active -> decommissioned

- service owner performs actions to remove it from production

- service owner changes Netbox's status from `ACTIVE` to `INVENTORY`

active -> staged

This transition should be used when reimaging the first host of a cluster into a newer OS that will likely need to be tested extensively before putting it back in production. It can also be used on other occasions when a rollback of the STAGED -> ACTIVE transition is needed.

- service owner performs actions to remove it from production

- service owner changes Netbox's status from `ACTIVE` to `STAGED`

active -> failed

- service owner performs actions to depool it from production

- service owner changes Netbox's status from `ACTIVE` to `FAILED`

failed -> staged

- DC Ops fixes the hardware failure

- DC Ops changes Netbox's status from `FAILED` to `STAGED`

decommissioned -> spare

- DC Ops wipes and powers down the host, renaming it to its WMF asset tag. (The network switch port is also disabled.)

- DC Ops changes Netbox's status from `INVENTORY` to `PLANNED`

decommissioned -> staged

This transition is for renames and immediate re-allocations into a different role.

- DC Ops wipes and reimages the host into `role::spare` with the new name

- DC Ops changes Netbox's status from `INVENTORY` to `STAGED`

failed | spare | decommissioned -> unracked

This transition covers three cases: decommissioning a failed host that is beyond repair, decommissioning an old host that was sitting as a spare, and normal decommissioning.

- DC Ops unracks the device, and removes the row/rack association in Netbox

- DC Ops changes Netbox's status to `OFFLINE`

- DC Ops places device in the data center's storage unit

- DC Ops adds device to the "Operations tracking" document, in the "unracked decomissioned" sheet [to be automated]

- DC Ops (eventually) communicates this (e.g. on a quarterly basis) to Finance, to be written off the books

unracked -> recycled

- DC Ops ships the device off to a recycler company

- DC Ops adds the device to the "Operations tracking" document, in the "sold-decom servers" sheet and removes it from the "unracked decomissioned" sheet [to be automated]

- DC Ops communicates the device to Finance as a "sold-off server"

- DC Ops deletes the device from Netbox entirely (previously: moves to a decom rack in Racktables)
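The transitions above can be summarized as a small lookup table, sketched below, that records which transitions the proposal allows and who performs each one. The `TRANSITIONS` dict and `check_transition` helper are hypothetical names for illustration; nothing like this exists in Netbox itself.

```python
from typing import Dict, Tuple

DC_OPS = "DC Ops"
OWNER = "service owner"

# (from lifecycle status, to lifecycle status) -> who performs the transition
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("requested", "spare"): DC_OPS,
    ("spare", "staged"): DC_OPS,
    ("staged", "active"): OWNER,
    ("active", "decommissioned"): OWNER,
    ("active", "staged"): OWNER,
    ("active", "failed"): OWNER,
    ("failed", "staged"): DC_OPS,
    ("decommissioned", "spare"): DC_OPS,
    ("decommissioned", "staged"): DC_OPS,
    ("failed", "unracked"): DC_OPS,
    ("spare", "unracked"): DC_OPS,
    ("decommissioned", "unracked"): DC_OPS,
    ("unracked", "recycled"): DC_OPS,
}


def check_transition(src: str, dst: str) -> str:
    """Return who performs src -> dst, or raise ValueError if not allowed."""
    try:
        return TRANSITIONS[(src, dst)]
    except KeyError:
        raise ValueError(f"transition {src} -> {dst} is not in the proposal")


print(check_transition("active", "failed"))
```

A check like this could guard a status change in a script, for example rejecting an attempt to go from `staged` straight to `recycled`.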

FAQ

- The spare term is overloaded, as it's used to describe two different points in the lifecycle of a host.
  - Host offline & wiped with just the management interface connected and online: `spare` lifecycle status
  - Host online, has puppet role `spare::system`: `staged` lifecycle status and normal hostname. These hosts are either about to be pushed into service, or are in the process of being removed from service.

- Renames: `active -> decommissioned -> staged` with the new name, skipping the `spare` status

- Decommission of a production host back into the spare pool: it's a rename to its WMF asset tag.

- Relationship between racked/unracked, power on/off and their Netbox status:

| Netbox status | Racked | Power |
|---|---|---|
| PLANNED | yes or no | off |
| STAGED | yes | on |
| ACTIVE | yes | on |
| FAILED | yes | on or off |
| INVENTORY | yes | on |
| OFFLINE | no | off |
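If this relationship were ever checked automatically (for instance against a power-status custom field), the table could be expressed as in the sketch below. The `EXPECTED` dict and the `is_consistent` helper are hypothetical names used only for this example.

```python
from typing import Dict, FrozenSet, Tuple

# Netbox status -> (allowed "racked" values, allowed power states)
EXPECTED: Dict[str, Tuple[FrozenSet[bool], FrozenSet[str]]] = {
    "PLANNED": (frozenset({True, False}), frozenset({"off"})),
    "STAGED": (frozenset({True}), frozenset({"on"})),
    "ACTIVE": (frozenset({True}), frozenset({"on"})),
    "FAILED": (frozenset({True}), frozenset({"on", "off"})),
    "INVENTORY": (frozenset({True}), frozenset({"on"})),
    "OFFLINE": (frozenset({False}), frozenset({"off"})),
}


def is_consistent(status: str, racked: bool, power: str) -> bool:
    """True if the racked/power combination matches the table for this status."""
    racked_ok, power_ok = EXPECTED[status]
    return racked in racked_ok and power in power_ok


print(is_consistent("PLANNED", False, "off"))
print(is_consistent("OFFLINE", True, "off"))
```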

Open questions

- Power on/off status: if needed we could add a custom field to Netbox to track the power status. Not sure it's worth it though, and it can be added at a later stage too.

- Should the wipe+reimage process be done on `active -> decommissioned` by the service owner instead of doing it in the next step?

- When do we want to start tracking IP and VLAN associations in Netbox?

Changes to be made to the page sections

This is a minimal list of required changes to be made to this wiki page to include the Netbox steps. We can then restructure the page to follow the above lifecycle statuses.

- Server states
  - Requested: kept as is
  - Existing System Allocation: to be split into the `DECOMMISSION -> STAGED` and `STAGED -> ACTIVE` transitions; as it is right now it is basically only the latter transition.
  - Ordered: kept as is
  - Post order: kept as is
  - Receiving Systems On-Site: change "insert into Racktables" to "insert into Netbox with status `PLANNED`". If the hostname is not available at this time, insert it without a hostname; the asset tag has its own field.
  - Racked: change "update Racktables" to "update Netbox with the hostname (if it was inserted without one) and rack location"
  - Installation: if the hostname is chosen at this time, update Netbox with it.
  - In Service: kept as is
  - Reinstallation: add a note for the `ACTIVE -> STAGED` transition to put the host in Netbox in `STAGED` if needed (not for normal `depool -> reimage -> pool` transitions).
  - Reclaim to Spares OR Decommission
    - Steps for ANY Opsen: add change Netbox status from `ACTIVE`, reimage into `spare::system` keeping the system's current hostname (unless already re-assigned to a different name/role).
    - Steps for DC-OPS (with network switch access): add change Netbox status from `INVENTORY` to `OFFLINE`, wipe disks, rename host to WMF asset tag in Netbox and on disabled network switch port.

- wmf-auto-reimage: kept as is (a CLI option could be added later on to the script to automatically change Netbox status on reimages that need to be put in `STAGED`).

- Server reimage + rename: add `active -> decommissioned -> staged` status changes

- Position Assignments: kept as is

- See also: kept as is

Stages

The documentation here does not reflect reality: the stages on Netbox are not the same as the ones mentioned here. It would be nice if whoever maintains Netbox or these docs could review and sync them.

My other request is to explain the stages a bit more in the initial paragraph. Active is more or less clear, but there are several stages of "inactivity" that aren't 100% clear to me. ---- Jcrespo 14:47, 5 June 2019 (UTC)